Adolf Hohl

Efficient deployment and inference of GPU-accelerated LLMs

#1about 2 minutes

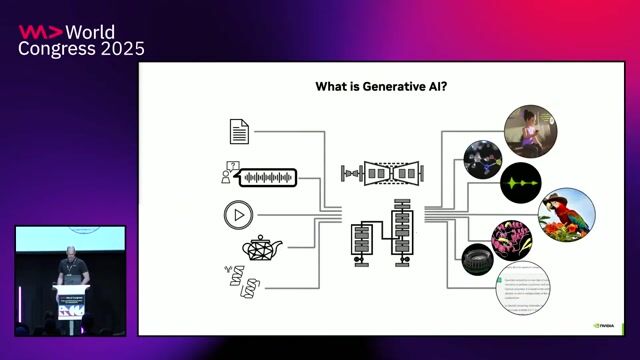

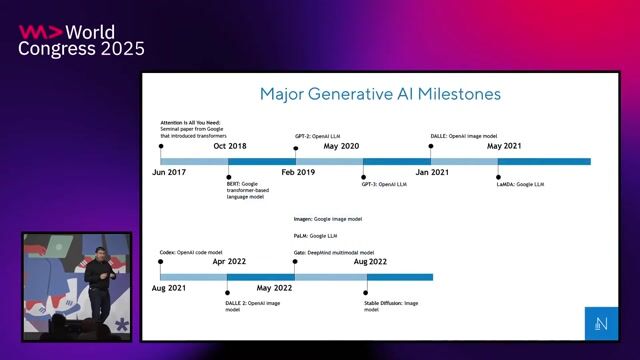

The evolution of generative AI from experimentation to production

Generative AI has rapidly moved from experimentation with models like Llama and Mistral to production-ready applications in 2024.

#2about 3 minutes

Comparing managed AI services with the DIY approach

Managed services offer ease of use but limited control, while a do-it-yourself approach provides full control but introduces significant complexity.

#3about 4 minutes

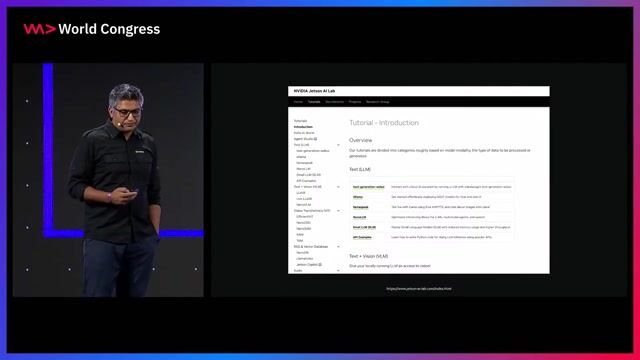

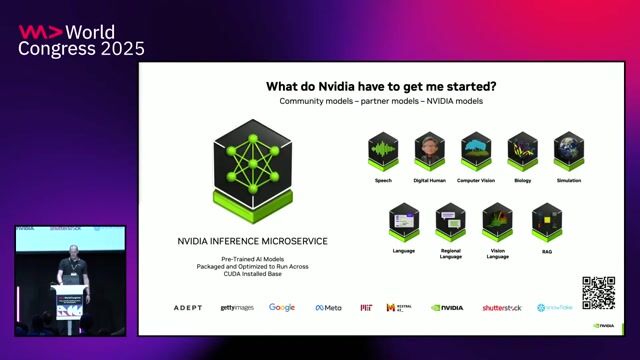

Introducing NVIDIA NIM for simplified LLM deployment

NVIDIA Inference Microservices (NIM) provide a containerized, OpenAI-compatible solution for deploying models anywhere with enterprise support.

#4about 2 minutes

Boosting inference throughput with lower precision quantization

Using lower precision formats like FP8 dramatically increases model inference throughput, providing more performance for the same hardware investment.

#5about 2 minutes

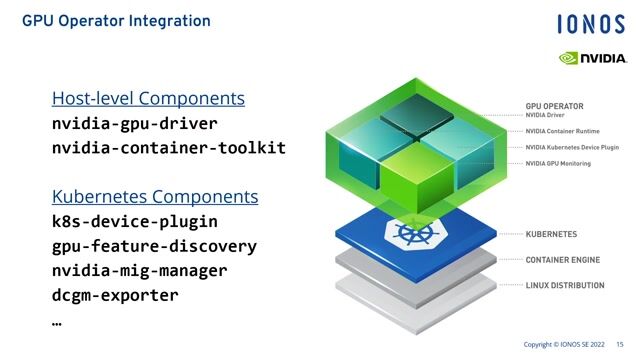

Overview of the NVIDIA AI Enterprise software platform

The NVIDIA AI Enterprise platform is a cloud-native software stack that abstracts away low-level complexities like CUDA to streamline AI pipeline development.

#6about 2 minutes

A look inside the NIM container architecture

NIM containers bundle optimized inference tools like TensorRT-LLM and Triton Inference Server to accelerate models on specific GPU hardware.

#7about 3 minutes

How to run and interact with a NIM container

A NIM container can be launched with a simple Docker command, automatically discovering hardware and exposing OpenAI-compatible API endpoints for interaction.

#8about 2 minutes

Efficiently serving custom models with LoRA adapters

NIM enables serving multiple customized LoRA adapters on a single base model simultaneously, saving memory while providing distinct model endpoints.

#9about 3 minutes

How NIM automatically handles hardware and model optimization

NIM simplifies deployment by automatically selecting the best pre-compiled model based on the detected GPU architecture and user preference for latency or throughput.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

02:16 MIN

Deploying models with TensorRT and Triton Inference Server

Trends, Challenges and Best Practices for AI at the Edge

02:14 MIN

Deploying enterprise AI applications with NVIDIA NIM

WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

03:08 MIN

Deploying and scaling models with NVIDIA NIM on Kubernetes

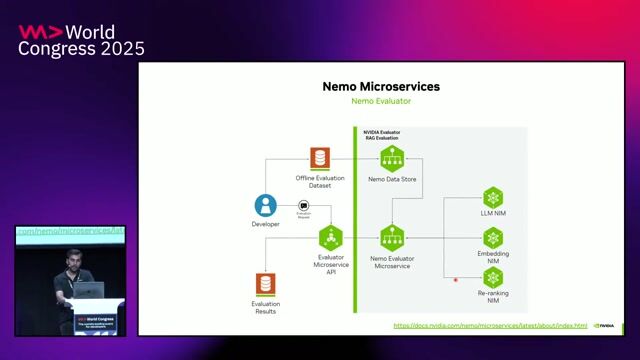

LLMOps-driven fine-tuning, evaluation, and inference with NVIDIA NIM & NeMo Microservices

01:37 MIN

Introduction to large-scale AI infrastructure challenges

Your Next AI Needs 10,000 GPUs. Now What?

01:32 MIN

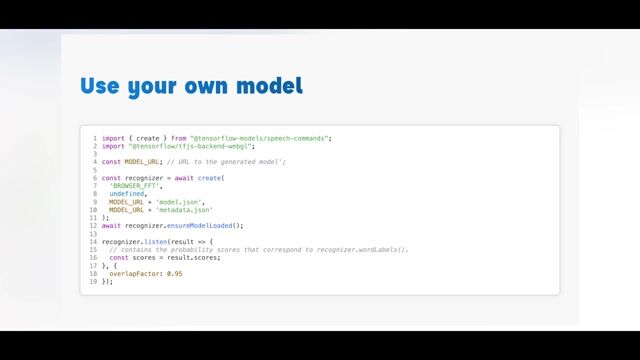

The technical challenges of running LLMs in browsers

From ML to LLM: On-device AI in the Browser

05:47 MIN

Using NVIDIA NIMs and blueprints to deploy models

Your Next AI Needs 10,000 GPUs. Now What?

03:08 MIN

Deploying custom inference workloads with NVIDIA NIMs

From foundation model to hosted AI solution in minutes

12:42 MIN

Running large language models locally with Web LLM

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

Featured Partners

Related Videos

29:52

29:52Your Next AI Needs 10,000 GPUs. Now What?

Anshul Jindal & Martin Piercy

29:20

29:20LLMOps-driven fine-tuning, evaluation, and inference with NVIDIA NIM & NeMo Microservices

Anshul Jindal

30:04

30:04Self-Hosted LLMs: From Zero to Inference

Roberto Carratalá & Cedric Clyburn

22:07

22:07WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

Ankit Patel

31:25

31:25Unveiling the Magic: Scaling Large Language Models to Serve Millions

Patrick Koss

31:50

31:50Unlocking the Power of AI: Accessible Language Model Tuning for All

Cedric Clyburn & Legare Kerrison

28:38

28:38Exploring LLMs across clouds

Tomislav Tipurić

25:35

25:35Adding knowledge to open-source LLMs

Sergio Perez & Harshita Seth

Related Articles

View all articles.gif?w=240&auto=compress,format)

.gif?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Peter Park System GmbH

München, Germany

Intermediate

Senior

Bash

Linux

Python

Peter Park System GmbH

München, Germany

Senior

Python

Docker

Node.js

JavaScript