Ankit Patel

WWC24 - Ankit Patel - Unlocking the Future: Breakthrough Application Performance and Capabilities with NVIDIA

#1 about 3 minutes

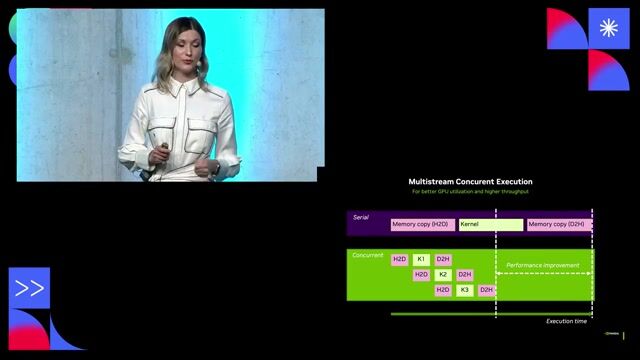

Understanding accelerated computing and GPU parallelism

Accelerated computing offloads the parallelizable parts of a workload from the CPU to the GPU, where many cores execute them simultaneously. For workloads that parallelize well, this can yield large speedups.
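The data-parallel pattern described here can be sketched in plain Python. This is only a CPU analogy, not GPU code: the function names are made up for illustration, and Python threads will not produce a real speedup. What it shows is the structure a GPU exploits, namely that each output element depends only on its own index, so all elements can be computed independently.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_element(i, a, x, y):
    """Work for one index: a single output element of a*x + y."""
    return a * x[i] + y[i]


def saxpy_serial(a, x, y):
    # CPU-style loop: elements are computed one after another.
    return [saxpy_element(i, a, x, y) for i in range(len(x))]


def saxpy_parallel(a, x, y, workers=4):
    # No index depends on another, so the iterations can be handed to a pool
    # of workers. A GPU applies the same idea with thousands of hardware threads.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda i: saxpy_element(i, a, x, y), range(len(x))))


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
print(saxpy_serial(2.0, x, y))    # [12.0, 24.0, 36.0, 48.0]
print(saxpy_parallel(2.0, x, y))  # same values, same order
```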

#2 about 2 minutes

Calculating the cost and power savings of GPUs

While a GPU-accelerated system costs more upfront, it can replace hundreds of CPU systems for parallel workloads, leading to significant cost and power savings.
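The comparison behind this claim is simple arithmetic, sketched below. The function name and every number in the example call are placeholders chosen for illustration; they are not figures from the talk, and real prices and power draws should be substituted.

```python
def consolidation_savings(cpu_nodes, cpu_node_cost, cpu_node_watts,
                          gpu_nodes, gpu_node_cost, gpu_node_watts):
    """Return (cost_saved, watts_saved) when gpu_nodes replace cpu_nodes."""
    cost_saved = cpu_nodes * cpu_node_cost - gpu_nodes * gpu_node_cost
    watts_saved = cpu_nodes * cpu_node_watts - gpu_nodes * gpu_node_watts
    return cost_saved, watts_saved


# Placeholder inputs, NOT measurements: 100 CPU nodes versus 1 GPU node.
cost_saved, watts_saved = consolidation_savings(
    cpu_nodes=100, cpu_node_cost=10_000, cpu_node_watts=500,
    gpu_nodes=1, gpu_node_cost=200_000, gpu_node_watts=5_000,
)
print(cost_saved, watts_saved)  # 800000 45000
```

A negative result would mean the GPU system does not pay off for those inputs, which is the case when the workload does not parallelize well enough to replace many CPU nodes.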

#3 about 4 minutes

Using NVIDIA libraries to easily accelerate applications

NVIDIA provides domain-specific libraries such as cuDF that let developers accelerate existing code, for example pandas DataFrame operations, with minimal changes.
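A minimal sketch of what "minimal changes" can look like, assuming the RAPIDS `cudf.pandas` accelerator mode is the mechanism meant here (the summary does not say which cuDF interface the talk used). The column names and data are invented. If cuDF is not installed, the script falls back to plain pandas on the CPU.

```python
# Assumption: cudf.pandas is cuDF's pandas accelerator mode. It has to be
# installed before pandas is imported; the pandas code below stays unchanged.
try:
    import cudf.pandas
    cudf.pandas.install()
except ImportError:
    pass  # cuDF not available: ordinary CPU pandas is used

import pandas as pd


def mean_price_by_symbol(df):
    # Ordinary pandas code; nothing here is GPU-specific.
    return df.groupby("symbol")["price"].mean()


df = pd.DataFrame({"symbol": ["A", "B", "A", "B"],
                   "price": [1.0, 2.0, 3.0, 4.0]})
result = mean_price_by_symbol(df)
print(result)
```

The same effect is available without touching the script by running it as `python -m cudf.pandas script.py`.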

#4 about 3 minutes

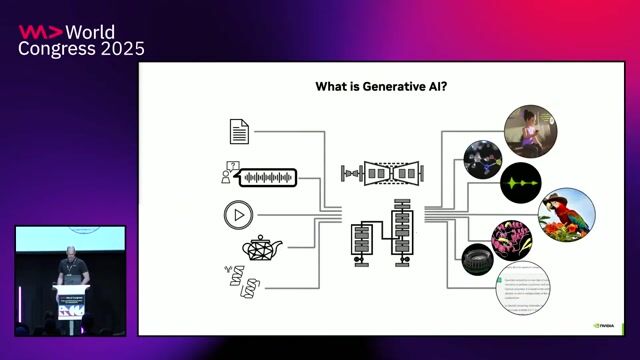

Shifting from traditional code to AI-powered logic

Modern AI development replaces complex, hard-coded logic with prompts to large language models, changing how developers implement functions like sentiment analysis.
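The shift can be illustrated with the sentiment analysis case. The sketch below contrasts a hand-written keyword rule with a prompt-based version. The word lists, the prompt wording, and the `complete` callable (a stand-in for any function that sends a prompt to an LLM and returns its text) are hypothetical; a stub replaces the model so the example runs offline.

```python
POSITIVE_WORDS = {"good", "great", "fast", "love"}
NEGATIVE_WORDS = {"bad", "terrible", "slow", "broken"}


def sentiment_by_rules(text):
    """Traditional approach: hard-coded keyword counting."""
    words = set(text.lower().split())
    score = len(words & POSITIVE_WORDS) - len(words & NEGATIVE_WORDS)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def sentiment_by_prompt(text, complete):
    """Prompt-based approach: the logic lives in the instruction to the model.

    `complete` is a hypothetical callable (prompt: str) -> str.
    """
    prompt = (
        "Classify the sentiment of the following review as positive, "
        "negative, or neutral. Answer with one word.\n\nReview: " + text
    )
    return complete(prompt).strip().lower()


def stub_llm(prompt):
    # Stand-in for a real model call, so the sketch runs without a network.
    return " Negative\n"


review = "not good at all"
print(sentiment_by_rules(review))             # positive (the rule misses the negation)
print(sentiment_by_prompt(review, stub_llm))  # negative
```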

#5 about 3 minutes

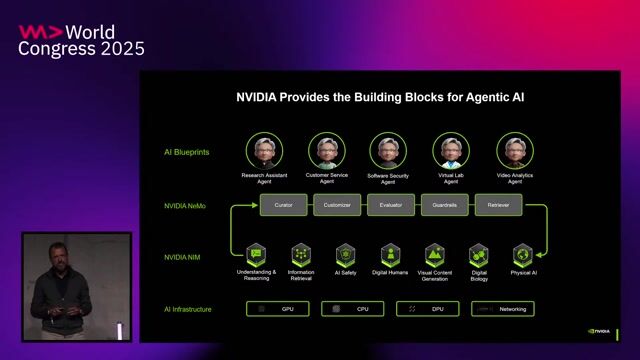

Composing multiple AI models for complex tasks

Developers can now create sophisticated applications by chaining multiple AI models together, such as using a vision model's output to trigger an LLM that calls a tool.
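The chaining pattern (vision model, then LLM, then tool call) can be sketched as a small pipeline. Everything concrete here is an assumption for illustration: the factory-camera scenario, the JSON reply format, and the tool names. The models are passed in as plain callables and replaced by stubs so the flow can be run without any model.

```python
import json


def run_pipeline(image, vision_model, llm, tools):
    """Chain: vision model output -> LLM decision -> tool call."""
    description = vision_model(image)
    reply = llm(
        "You monitor a camera feed. Scene: " + description + "\n"
        'Reply only with JSON of the form {"tool": "<name>", "args": {}}. '
        "Available tools: " + ", ".join(sorted(tools))
    )
    call = json.loads(reply)      # the LLM's structured decision
    tool = tools[call["tool"]]    # look up the requested tool
    return tool(**call["args"])   # execute it with the LLM's arguments


# Stubs standing in for real models and tools (hypothetical behavior).
def stub_vision(image):
    return "smoke near the conveyor belt"


def stub_llm(prompt):
    return '{"tool": "raise_alert", "args": {"level": "high"}}'


tools = {
    "raise_alert": lambda level: "alert sent (" + level + ")",
    "do_nothing": lambda: "no action",
}

print(run_pipeline(b"raw image bytes", stub_vision, stub_llm, tools))
# alert sent (high)
```

A production version would also need to validate the LLM's reply before calling a tool, since the model may return malformed JSON or an unknown tool name.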

#6 about 2 minutes

Deploying enterprise AI applications with NVIDIA NIM

NVIDIA NIM provides enterprise-grade microservices for deploying AI models with features like runtime optimization, stable APIs, and Kubernetes integration.
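As a rough sketch of what calling such a microservice can look like, the code below builds (but does not send) a chat request. It assumes a NIM LLM container running locally that exposes an OpenAI-compatible `/v1/chat/completions` route; the base URL, port, and model name are assumptions, not details from the talk, and should be replaced with the values of an actual deployment.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_message):
    """Build a POST request for an OpenAI-style chat completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumed values: a local deployment on port 8000 and an example model name.
request = build_chat_request(
    "http://localhost:8000", "meta/llama3-8b-instruct",
    "Summarize accelerated computing in one sentence.",
)
print(request.full_url)

# To send it against a running service:
#   with urllib.request.urlopen(request) as response:
#       print(json.load(response))
```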

#7 about 4 minutes

Accessing NVIDIA's developer programs and training

NVIDIA offers a developer program with access to libraries, NIMs for local development, and free training courses through the Deep Learning Institute.


Matching moments

05:12 MIN

Boosting Python performance with the Nvidia CUDA ecosystem

The weekly developer show: Boosting Python with CUDA, CSS Updates & Navigating New Tech Stacks

01:40 MIN

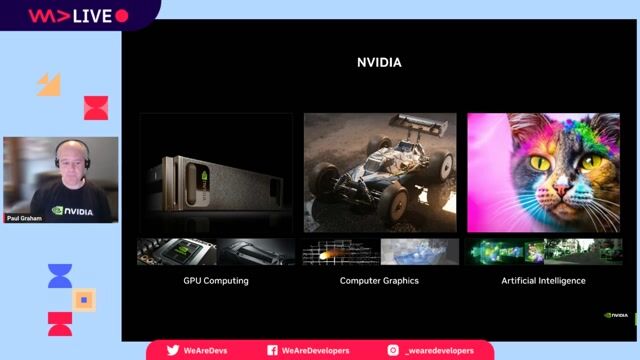

The rise of general-purpose GPU computing

Accelerating Python on GPUs

01:04 MIN

NVIDIA's platform for the end-to-end AI workflow

Trends, Challenges and Best Practices for AI at the Edge

01:37 MIN

Introduction to large-scale AI infrastructure challenges

Your Next AI Needs 10,000 GPUs. Now What?

01:57 MIN

Highlighting impactful contributions and the rise of open models

Open Source: The Engine of Innovation in the Digital Age

03:22 MIN

Using NVIDIA's full-stack platform for developers

Pioneering AI Assistants in Banking

02:21 MIN

How GPUs evolved from graphics to AI powerhouses

Accelerating Python on GPUs

02:44 MIN

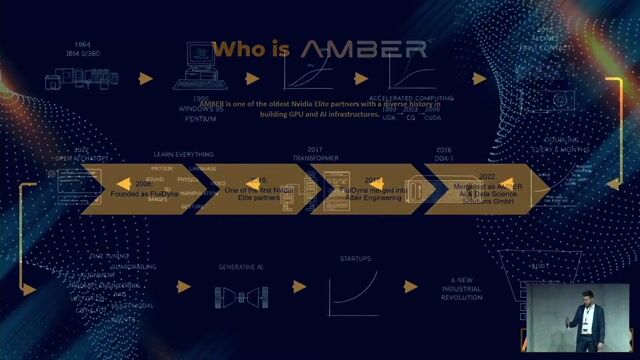

Key milestones in the evolution of AI and GPU computing

AI Factories at Scale

