Natan Silnitsky

Advanced Caching Patterns used by 2000 microservices

#1about 7 minutes

Why caching is critical for services at scale

Caching reduces latency, lowers infrastructure costs, and improves reliability by making services less dependent on databases or third-party services.

#2about 1 minute

Knowing when not to implement a cache

Avoid adding a cache prematurely for young products with low traffic, as it introduces unnecessary complexity, potential bugs, and additional failure points.

#3about 4 minutes

Caching critical configuration with an S3-backed cache

Use a read-through cache backed by S3 to store static, rarely updated configuration data, ensuring service startup reliability even when dependencies are down.

#4about 6 minutes

Building a dynamic LRU cache with DynamoDB and CDC

Implement a cache-aside pattern using an in-memory LRU cache backed by DynamoDB and populated via Kafka CDC streams to reduce database load for frequently accessed data.

#5about 5 minutes

Using Kafka compact topics for in-memory datasets

For smaller datasets, use Kafka's compact topics to maintain a complete, up-to-date copy of the data in-memory for each service instance.

#6about 6 minutes

Implementing an HTTP reverse proxy cache with Varnish

Use a reverse proxy like Varnish Cache with a robust invalidation strategy to dramatically reduce response times for services with expensive computations like server-side rendering.

#7about 4 minutes

A decision tree for choosing the right caching pattern

Follow a simple flowchart to select the appropriate caching strategy based on whether the data is for startup, dynamic retrieval, or stable HTTP responses.

#8about 12 minutes

Q&A on caching strategies and implementation details

The discussion covers HTTP header caching, custom invalidation logic, handling the "thundering herd" problem, and the choice of JVM for high-performance services.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

02:46 MIN

Moving to the cloud and implementing Varnish cache

Scaling: from 0 to 20 million users

03:00 MIN

Implementing resilience patterns like caching and fallbacks

Microservices with Micronaut

01:31 MIN

Optimizing cache efficiency with a dedicated sharded layer

Scaling: from 0 to 20 million users

02:44 MIN

Using distributed caches to reduce database load

In-Memory Computing - The Big Picture

03:41 MIN

Reducing server load with build steps and caching

Sleek, Swift, and Sustainable: Optimizations every web developer should consider

05:27 MIN

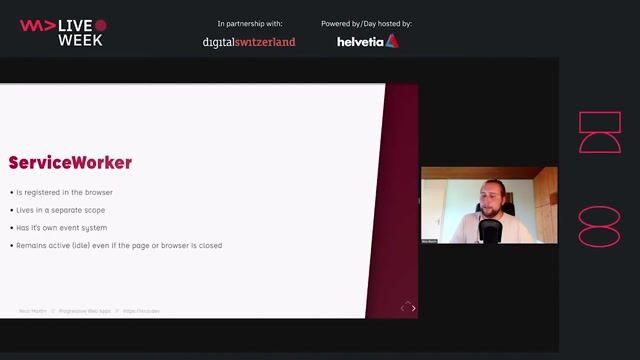

Implementing caching strategies with service workers and Workbox

Progressive Web Apps - The next big thing

05:05 MIN

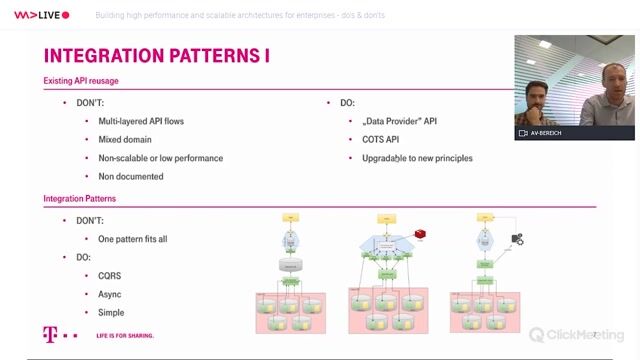

Applying patterns for data replication, caching, and commands

Building high performance and scalable architectures for enterprises

03:38 MIN

Q&A on cache strategies and dynamic content

Offline first!

Featured Partners

Related Videos

28:12

28:12Scaling: from 0 to 20 million users

Josip Stuhli

30:27

30:27HTTP headers that make your website go faster

Thijs Feryn

30:36

30:36In-Memory Computing - The Big Picture

Markus Kett

46:24

46:24The Rise of Reactive Microservices

David Leitner

22:32

22:32Swapping Low Latency Data Storage Under High Load

George Asafev

36:58

36:58Event based cache invalidation in GraphQL

Simone Sanfratello

29:14

29:14Sleek, Swift, and Sustainable: Optimizations every web developer should consider

Andreas Taranetz

49:52

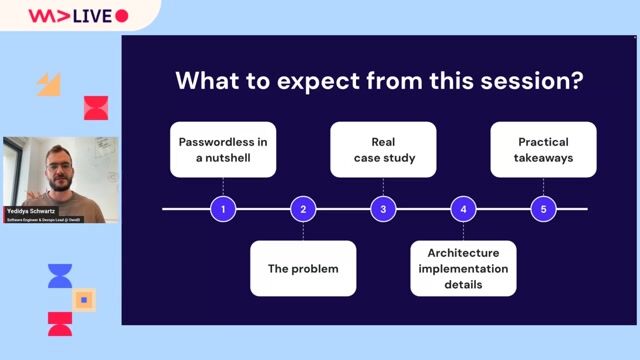

49:52Accelerating Authentication Architecture: Taking Passwordless to the Next Level

Yedidya Schwartz

Related Articles

View all articles.gif?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

AUTO1 Group SE

Berlin, Germany

Intermediate

Senior

ELK

Terraform

Elasticsearch

doinstruct Software GmbH

Berlin, Germany

Intermediate

Senior

Node.js

Speech Processing Solutions

Vienna, Austria

Intermediate

CSS

HTML

JavaScript

TypeScript

Peter Park System GmbH

München, Germany

Senior

Python

Docker

Node.js

JavaScript

M&M Software GmbH

Sankt Georgen im Schwarzwald, Germany

Intermediate

Senior

Docker

denkwerk GmbH

Köln, Germany

Intermediate

Senior

NestJS

Kubernetes