
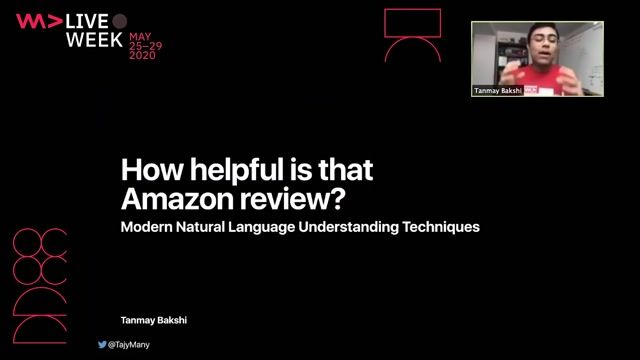

#1 (about 7 minutes)

Quantifying the usefulness of an Amazon review

A neural network, trained on user feedback data, ranks Amazon reviews by assigning each one a continuous scalar that represents its helpfulness.
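Because each review gets a single number, ranking reduces to sorting by that number. Below is a minimal Python sketch. `toy_score` is a hypothetical stand-in for the trained network that simply counts words, and `rank_reviews` is an illustrative helper, not code from the talk:

```python
from typing import Callable, List


def rank_reviews(reviews: List[str], score: Callable[[str], float]) -> List[str]:
    """Order reviews from most to least helpful by a scalar score."""
    return sorted(reviews, key=score, reverse=True)


def toy_score(review: str) -> float:
    # Hypothetical stand-in for the trained network: longer reviews score higher.
    return float(len(review.split()))


reviews = [
    "Great.",
    "Battery lasts two days and the screen is sharp.",
    "Works fine overall.",
]
for review in rank_reviews(reviews, toy_score):
    print(f"{toy_score(review):4.1f}  {review}")
```

Any scorer that returns one float per review can be plugged in. The scalar imposes a total order, so reviews can be ranked even though no two of them were ever compared directly.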

#2 (about 6 minutes)

How neural networks learn features in embedding spaces

Deep neural networks project input data into an embedding space where it becomes linearly separable for a final classifier.
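The classic XOR problem illustrates this. In the raw input space, no single straight line separates XOR's two classes, but after a nonlinear hidden layer, a linear classifier is enough. Below is a minimal Python sketch. The hidden-layer weights are hand-picked for clarity rather than learned, and `embed` and `linear_classifier` are illustrative names:

```python
from typing import Tuple


def relu(v: float) -> float:
    return max(0.0, v)


def embed(x1: float, x2: float) -> Tuple[float, float]:
    """Project a 2-d input into a 2-d embedding with one ReLU hidden layer.

    The weights are hand-picked; a trained network would learn comparable ones.
    """
    s = float(x1 + x2)
    return (relu(s), relu(s - 1.0))


def linear_classifier(h: Tuple[float, float]) -> int:
    # A single linear decision boundary in embedding space: h1 - 2*h2 = 0.5
    return int(h[0] - 2.0 * h[1] > 0.5)


xor_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
for (x1, x2), label in xor_data:
    h = embed(x1, x2)
    print((x1, x2), "->", h, "predicted:", linear_classifier(h), "expected:", label)
```

In the embedding, (0, 1) and (1, 0) land on the same point (1.0, 0.0). That is what lets one line separate the classes.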

#3 (about 10 minutes)

The evolution and limitations of traditional NLP models

Techniques such as Word2Vec create semantic vectors for words, but the recurrent neural networks (RNNs) that consume them are slow, hard to parallelize because each time step depends on the previous one, and domain-specific.
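Both ideas can be made concrete. Word vectors place related words close together, measured here with cosine similarity. An RNN then consumes those vectors one time step at a time, and each hidden state depends on the previous one, so the steps of a sequence cannot run in parallel. Below is a minimal Python sketch. The 2-d vectors are made-up values, not trained Word2Vec embeddings, and the RNN uses scalar weights instead of full weight matrices for brevity:

```python
import math
from typing import Dict, List

# Made-up 2-d vectors standing in for trained Word2Vec embeddings.
EMBEDDINGS: Dict[str, List[float]] = {
    "great": [0.9, 0.1],
    "good": [0.8, 0.2],
    "broken": [-0.7, 0.6],
}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: close to 1 for vectors pointing the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rnn_encode(words: List[str], w_in: float = 0.5, w_rec: float = 0.8) -> List[float]:
    """Simplified RNN: h_t = tanh(w_in * x_t + w_rec * h_{t-1}), element-wise.

    Step t needs h_{t-1}, so the loop must run one step after another.
    """
    h = [0.0, 0.0]
    for word in words:
        x = EMBEDDINGS[word]
        h = [math.tanh(w_in * xi + w_rec * hi) for xi, hi in zip(x, h)]
    return h


print(round(cosine(EMBEDDINGS["great"], EMBEDDINGS["good"]), 3))
print(round(cosine(EMBEDDINGS["great"], EMBEDDINGS["broken"]), 3))
print(rnn_encode(["great", "good", "broken"]))
```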

#4 (about 8 minutes)

How BERT and transformers solve core NLP challenges

The BERT model, built on the transformer architecture, is domain-independent and parallelizable. It learns word context through masked language modeling, a training approach in which the model predicts hidden words from their surroundings.
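Masked language modeling needs no labeled data. A fraction of the input tokens is hidden, and the model is trained to predict the originals from the context on both sides. Below is a minimal Python sketch of preparing one training example. It replaces every selected token with `[MASK]`. This simplifies the original BERT recipe, which sometimes substitutes a random token or leaves the selected token unchanged instead. `mask_tokens` is an illustrative name, and the 15% default rate follows the BERT paper:

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(
    tokens: List[str], mask_prob: float = 0.15, seed: int = 0
) -> Tuple[List[str], List[Optional[str]]]:
    """Build (inputs, labels) for masked language modeling.

    labels[i] is the original token where position i was masked, else None;
    positions labeled None would be ignored by the training loss.
    """
    rng = random.Random(seed)
    inputs: List[str] = []
    labels: List[Optional[str]] = []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)  # hide the token from the model
            labels.append(tok)   # ...but keep it as the prediction target
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels


tokens = "this review was really helpful to me".split()
inputs, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```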

#5 (about 7 minutes)

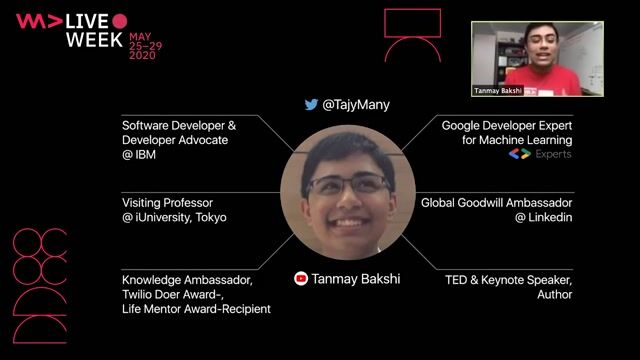

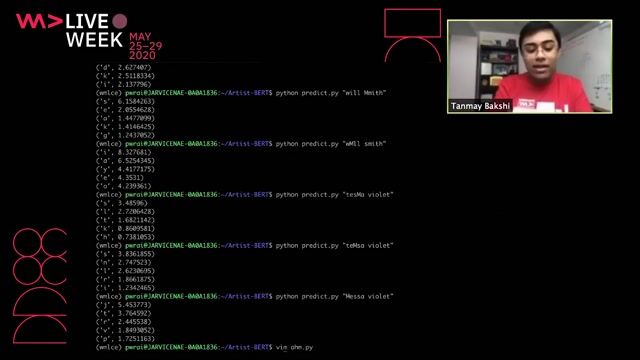

Building a character-level model with self-attention

A custom 'Artist BERT' model demonstrates how self-attention layers can predict masked characters in a sequence by combining positional and contextual information.
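The core operation is scaled dot-product self-attention, `softmax(Q K^T / sqrt(d)) V`. Here it is applied to character embeddings with position embeddings added, which makes character order visible to the model. Below is a minimal pure-Python sketch with random, untrained weights. The vocabulary, the use of `?` as the mask character, and the dimensions are assumptions for illustration, not details of the 'Artist BERT' model:

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention over the rows of x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(wq[0]))
    weights = [softmax([s / scale for s in row]) for row in matmul(q, transpose(k))]
    return matmul(weights, v)  # each output row mixes values from every position


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(42)
d_model = 4
vocab = "abcdefghijklmnopqrstuvwxyz?"  # '?' plays the role of the mask character
char_emb = {ch: rand_matrix(1, d_model, rng)[0] for ch in vocab}

text = "ca?"  # the third character is masked
pos_emb = rand_matrix(len(text), d_model, rng)  # one embedding per position
x = [[e + p for e, p in zip(char_emb[ch], pos_emb[i])] for i, ch in enumerate(text)]

wq, wk, wv = (rand_matrix(d_model, d_model, rng) for _ in range(3))
out = self_attention(x, wq, wk, wv)
print(len(out), "positions x", len(out[0]), "dims")
```

A trained model would pass the output row at the `?` position through a projection onto the 27-character vocabulary, followed by a softmax, to predict the hidden character. That prediction head is omitted here.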

#6 (about 8 minutes)

Training a model to rank reviews with relative values

The review ranker is trained as a classification problem on pairs of reviews (which of the two is more helpful?). This forces it to learn a scalar helpfulness value without ever being given absolute scores.
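One common way to set this up is a RankNet-style logistic loss. This is an assumption here, not a detail taken from the talk. The network scores each review of a pair separately, and a sigmoid turns the difference of the two scores into P(first review is more helpful). Binary cross-entropy against the pairwise label then drives training. Below is a minimal pure-Python sketch. A linear scorer stands in for the neural network, and the synthetic feature vectors are labeled using hidden weights `true_w`. All names and data are hypothetical:

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Pair = Tuple[Vector, Vector, int]  # (review_a, review_b, 1 if a is more helpful else 0)


def score(w: Vector, x: Vector) -> float:
    """Pointwise helpfulness score; a linear stand-in for the neural network."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_pairwise(pairs: List[Pair], dim: int, lr: float = 0.1, epochs: int = 200) -> Vector:
    """Learn pointwise weights from relative labels only (RankNet-style loss)."""
    w = [0.0] * dim
    for _ in range(epochs):
        for a, b, y in pairs:
            p = sigmoid(score(w, a) - score(w, b))  # model's P(a beats b)
            # Gradient of binary cross-entropy w.r.t. w is (p - y) * (a - b).
            for i in range(dim):
                w[i] -= lr * (p - y) * (a[i] - b[i])
    return w


# Synthetic data: true_w labels the pairs; train_pairwise only sees the labels.
rng = random.Random(0)
true_w = [2.0, -1.0]
reviews = [[rng.uniform(0.0, 1.0), rng.uniform(0.0, 1.0)] for _ in range(20)]
pairs: List[Pair] = []
for _ in range(200):
    a, b = rng.sample(reviews, 2)
    pairs.append((a, b, int(score(true_w, a) > score(true_w, b))))

w = train_pairwise(pairs, dim=2)
ranked = sorted(reviews, key=lambda r: score(w, r), reverse=True)
print("learned weights:", [round(v, 2) for v in w])
print("most helpful review features:", [round(v, 2) for v in ranked[0]])
```

The loss depends only on score differences, so any constant offset cancels out of it. The learned scores are therefore meaningful only relative to one another, which is all that ranking requires.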

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

06:40 MIN

Ranking Amazon reviews by learning a quality score

Ranking Amazon Reviews by Quality with Pointwise Ratings learned from Pairwise Data

08:25 MIN

From Word2Vec and LSTMs to modern transformers

What do language models really learn

07:44 MIN

Training a pointwise ranker from pairwise review data

Ranking Amazon Reviews by Quality with Pointwise Ratings learned from Pairwise Data

02:59 MIN

Highlighting successful applications of deep learning

The pitfalls of Deep Learning - When Neural Networks are not the solution

01:54 MIN

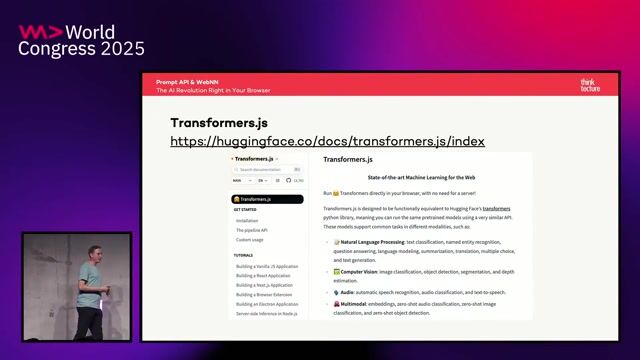

Exploring diverse ML workloads with Transformers.js

Prompt API & WebNN: The AI Revolution Right in Your Browser

05:33 MIN

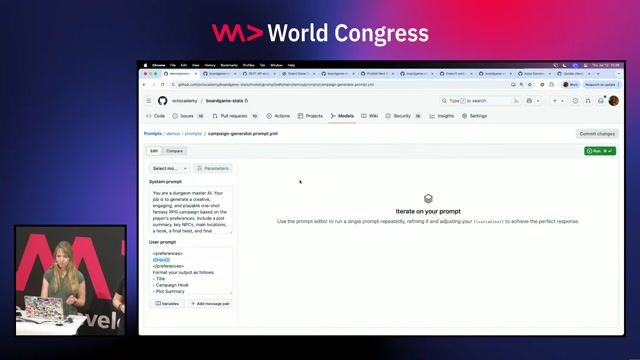

Using evaluators to compare AI model variants

Bringing AI Model Testing and Prompt Management to Your Codebase with GitHub Models

08:06 MIN

Introducing the BERT and transformer architecture

Ranking Amazon Reviews by Quality with Pointwise Ratings learned from Pairwise Data

02:52 MIN

The role of transformers and the attention mechanism

AI'll Be Back: Generative AI in Image, Video, and Audio Production

Featured Partners

Related Videos

Ranking Amazon Reviews by Quality with Pointwise Ratings learned from Pairwise Data (47:26)

Tanmay Bakshi

21:01

21:01Hybrid AI: Next Generation Natural Language Processing

Jan Schweiger

27:23

27:23How We Built a Machine Learning-Based Recommendation System (And Survived to Tell the Tale)

Dora Petrella

26:54

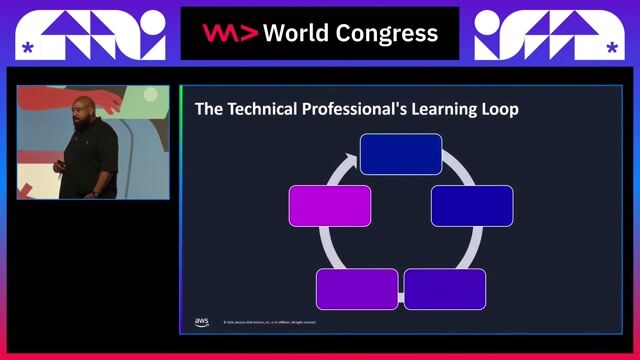

26:54Make it simple, using generative AI to accelerate learning

Duan Lightfoot

28:07

28:07How we built an AI-powered code reviewer in 80 hours

Yan Cui

22:07

22:07WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

Ankit Patel

26:30

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

22:11

22:11How computers learn to see – Applying AI to industry

Antonia Hahn

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Infosupport

Veenendaal, Netherlands

€0K

Natural Language Processing

Understanding Recruitment Group

Barcelona, Spain

Remote

Node.js

Computer Vision

Machine Learning

NLP People

Municipality of Valencia, Spain

Intermediate

GIT

Linux

NoSQL

NumPy

Keras

+11