Christian Liebel

Prompt API & WebNN: The AI Revolution Right in Your Browser

#1 · about 3 minutes

The case for running AI models locally

Cloud-based AI has drawbacks: it doesn't work offline, it can run into capacity limits, it raises data-privacy concerns, and it often comes with subscription costs. This creates an opportunity for local, on-device models.

#2 · about 2 minutes

Two primary approaches for browser-based AI

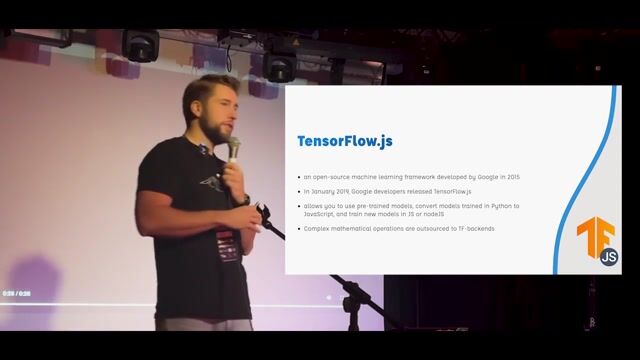

The W3C is exploring two main approaches for on-device AI: "Bring Your Own AI", where the web app supplies its own model through libraries like WebLLM or low-level APIs like WebNN, and experimental "Built-in AI" APIs like the Prompt API, where the browser supplies the model.

#3 · about 3 minutes

Running large language models with WebLLM

The WebLLM library uses WebGPU to run open-weight large language models directly in the browser. The downloaded model weights are kept in the browser's Cache Storage, so chat and data processing keep working offline.
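Because WebLLM depends on WebGPU, an app might check for it before offering a local model. The sketch below uses only the standard `navigator.gpu.requestAdapter()` call; the function name and the `nav` parameter are hypothetical, and the parameter exists only so the check can be tested outside a browser.

```typescript
// Minimal sketch: detect a usable WebGPU adapter before loading a WebLLM model.
// `nav` defaults to the real navigator; tests can pass a stand-in object.
type GpuLike = { gpu?: { requestAdapter(): Promise<unknown> } };

async function hasWebGPUAdapter(
  nav: GpuLike = (globalThis as any).navigator ?? {},
): Promise<boolean> {
  // No navigator.gpu at all: the browser does not expose WebGPU.
  if (!nav.gpu) return false;
  // requestAdapter() resolves to null when no suitable GPU adapter is available.
  const adapter = await nav.gpu.requestAdapter();
  return adapter != null;
}
```

If this resolves to `true`, the app could go on to load a model with WebLLM's `CreateMLCEngine(...)` from `@mlc-ai/web-llm`; otherwise it can fall back to a cloud endpoint. A `true` result says nothing about whether the GPU has enough memory for a particular model.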

#4 · about 1 minute

Solving the model size and storage problem

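Because browser storage is isolated per origin, every website has to download and store its own copy of a large AI model, even if another site already holds the same file. This storage problem led to a proposal for a Cross-Origin Storage API that would let different websites share models.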
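The Cross-Origin Storage API is only a proposal, so the sketch below does not call it. It illustrates one idea that such sharing could rest on (an assumption about the mechanism, not the proposal's exact design): identify a model file by a hash of its contents rather than by the site that downloaded it. The helper name is hypothetical; the hashing uses the standard Web Crypto `crypto.subtle.digest()`.

```typescript
// Sketch: identify a model file by the SHA-256 hash of its bytes (Web Crypto API).
// Two sites that download byte-identical weights derive the same key, which is
// what would let a shared, cross-origin store avoid keeping duplicate copies.
async function modelContentKey(bytes: Uint8Array): Promise<string> {
  const digest = await globalThis.crypto.subtle.digest("SHA-256", bytes);
  // Hex-encode the 32-byte digest into a 64-character string.
  return Array.from(new Uint8Array(digest), (b) => b.toString(16).padStart(2, "0")).join("");
}
```

Keying by content also lets a site verify that a shared file is exactly the model it expects before using it.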

#5 · about 2 minutes

Exploring diverse ML workloads with Transformers.js

The Transformers.js library enables many on-device machine learning tasks beyond text generation, such as computer vision and audio processing, as a sketch-recognition game demonstrates.

#6 · about 4 minutes

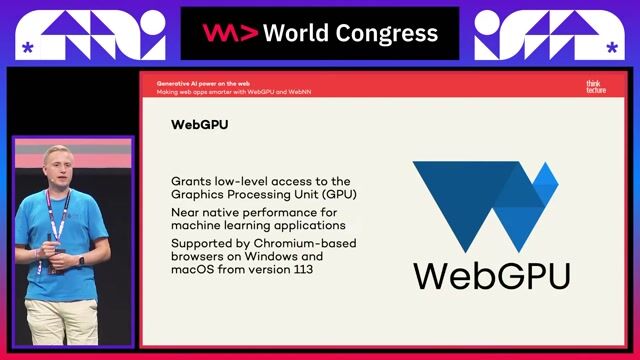

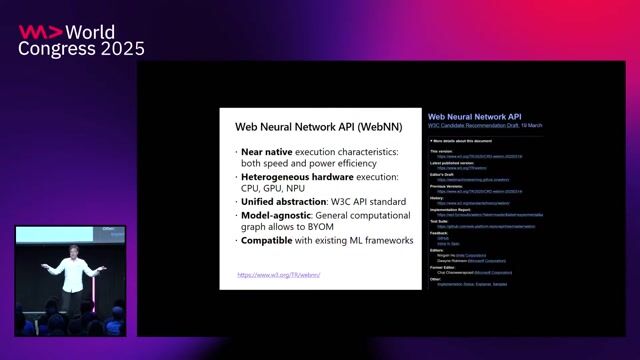

Accelerating performance with the WebNN API

The upcoming Web Neural Network (WebNN) API provides direct access to specialized hardware like NPUs, offering a significant performance increase for ML tasks compared to CPU or GPU processing.
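To give a feel for the programming model, here is a minimal WebNN sketch that builds and runs a tiny graph (element-wise addition). It assumes the API shape of the current W3C draft as implemented in recent Chromium builds behind a flag; WebNN is experimental and names and signatures have changed between versions, so check the current spec. The function name and the `nav` test parameter are hypothetical.

```typescript
// Minimal WebNN sketch: add two float32 vectors on whatever accelerator the
// browser picks. Assumes the current W3C draft / recent Chromium API shape.
type MLLike = { createContext(options?: object): Promise<any> };

async function addWithWebNN(
  a: Float32Array,
  b: Float32Array,
  nav: { ml?: MLLike } = (globalThis as any).navigator ?? {},
): Promise<Float32Array | null> {
  if (a.length !== b.length) throw new RangeError("vectors must have equal length");
  const GraphBuilder = (globalThis as any).MLGraphBuilder;
  // No WebNN in this browser: return null so the caller can fall back (e.g. to WebGPU or WASM).
  if (!nav.ml || !GraphBuilder) return null;

  // "npu" is a device hint from earlier drafts; newer versions may ignore it (assumption).
  const context = await nav.ml.createContext({ deviceType: "npu" });

  // Describe the computation as a graph: sum = a + b.
  const builder = new GraphBuilder(context);
  const desc = { dataType: "float32", shape: [a.length] };
  const sum = builder.add(builder.input("a", desc), builder.input("b", desc));
  const graph = await builder.build({ sum });

  // Inputs are written from script; the output must be readable back.
  const inA = await context.createTensor({ ...desc, writable: true });
  const inB = await context.createTensor({ ...desc, writable: true });
  const out = await context.createTensor({ ...desc, readable: true });
  context.writeTensor(inA, a);
  context.writeTensor(inB, b);
  context.dispatch(graph, { a: inA, b: inB }, { sum: out });
  return new Float32Array(await context.readTensor(out));
}
```

Real workloads build much larger graphs (usually via a library such as ONNX Runtime Web rather than by hand), but the flow of context, graph builder, build, then dispatch stays the same.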

#7 · about 3 minutes

The alternative: Built-in AI and the Prompt API

Google Chrome's experimental built-in AI initiative addresses the model-sharing and performance issues with APIs, proposed for standardization, that are backed by a single browser-managed model such as Gemini Nano, shared by all websites.
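Since the browser manages the model, an app first asks whether it is available and then creates a session. This sketch assumes the global `LanguageModel` with `availability()` and `create()` as described in current Chrome documentation (earlier builds exposed the same idea as `self.ai.languageModel`), including the `monitor` option and its `downloadprogress` event. The helper name is hypothetical.

```typescript
// Sketch of Chrome's built-in Prompt API (experimental). Assumes the global
// `LanguageModel` from current Chrome docs; the shape has changed across versions.
type Availability = "unavailable" | "downloadable" | "downloading" | "available";

interface PromptSession {
  prompt(input: string): Promise<string>;
  destroy(): void;
}

interface LanguageModelApi {
  availability(): Promise<Availability>;
  create(options?: object): Promise<PromptSession>;
}

async function getBuiltInSession(
  lm: LanguageModelApi | undefined = (globalThis as any).LanguageModel,
  onProgress: (fraction: number) => void = () => {},
): Promise<PromptSession | null> {
  // API not exposed at all (other browser, or flag disabled).
  if (!lm) return null;
  const status = await lm.availability();
  // The device does not meet the model's requirements.
  if (status === "unavailable") return null;
  // For "downloadable"/"downloading", create() starts or waits for the
  // one-time model download; progress is reported as a fraction from 0 to 1.
  return lm.create({
    monitor(m: EventTarget) {
      m.addEventListener("downloadprogress", (e) => onProgress((e as any).loaded));
    },
  });
}
```

Chrome may require a user gesture (such as a click) before `create()` can start a download, so in a real app this is best called from an event handler.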

#8 · about 4 minutes

Exploring the built-in AI API suite

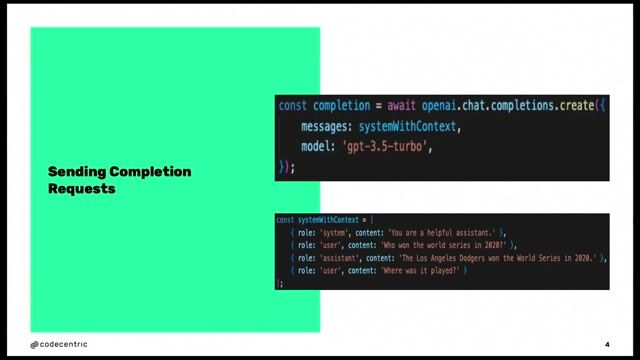

A demo of the built-in AI APIs shows how to call the Summarizer API, the Language Detector API, and the general-purpose Prompt API directly from JavaScript in the browser.
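Here is a hedged sketch of combining the Language Detector and Summarizer APIs. It assumes the `LanguageDetector.create()`/`detect()` and `Summarizer.create()`/`summarize()` shapes from Chrome's current documentation; the option values (`"tldr"`, `"plain-text"`, `"short"`) are just one possible choice, availability checks are left out for brevity, and the function name is hypothetical.

```typescript
// Sketch: detect a text's language and summarize it with Chrome's built-in AI APIs.
// Each API is optional; if a browser lacks one, the matching field stays null.
interface DetectorResult {
  detectedLanguage: string;
  confidence: number;
}

interface BuiltInApis {
  LanguageDetector?: { create(): Promise<{ detect(text: string): Promise<DetectorResult[]> }> };
  Summarizer?: { create(options?: object): Promise<{ summarize(text: string): Promise<string> }> };
}

async function describeText(text: string, apis: BuiltInApis = globalThis as any) {
  let language: string | null = null;
  if (apis.LanguageDetector) {
    const detector = await apis.LanguageDetector.create();
    // Pick the candidate with the highest confidence rather than relying on order.
    let best: DetectorResult | undefined;
    for (const r of await detector.detect(text)) {
      if (!best || r.confidence > best.confidence) best = r;
    }
    language = best?.detectedLanguage ?? null;
  }

  let summary: string | null = null;
  if (apis.Summarizer) {
    const summarizer = await apis.Summarizer.create({ type: "tldr", format: "plain-text", length: "short" });
    summary = await summarizer.summarize(text);
  }
  return { language, summary };
}
```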

#9 · about 4 minutes

Practical use cases for on-device AI

On-device AI can enhance web applications with features such as an offline-capable chatbot in an Angular app or a smart form filler that automatically categorizes user data and enters it into the right fields.
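A smart form filler can ask the model for structured output instead of free text. This sketch assumes the Prompt API's `responseConstraint` option (a JSON Schema, available in recent Chrome versions) and uses hypothetical field and function names. It still validates the answer, since a small on-device model can return incomplete or unexpected output.

```typescript
// Sketch: turn free text (e.g. a pasted signature) into form field values.
// FIELDS and extractFormData are hypothetical names for illustration.
interface PromptSession {
  prompt(input: string, options?: { responseConstraint?: object }): Promise<string>;
}

const FIELDS = ["firstName", "lastName", "email", "city"] as const;
type FormValues = Partial<Record<(typeof FIELDS)[number], string>>;

async function extractFormData(session: PromptSession, text: string): Promise<FormValues> {
  // JSON Schema describing the object we want back.
  const schema = {
    type: "object",
    properties: Object.fromEntries(FIELDS.map((f) => [f, { type: "string" }])),
    additionalProperties: false,
  };
  const raw = await session.prompt(
    `Extract these fields from the text below: ${FIELDS.join(", ")}. Omit fields that are not present.\n\n${text}`,
    { responseConstraint: schema },
  );

  // Parse defensively and keep only known, non-empty string fields.
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return {};
  }
  const result: FormValues = {};
  if (parsed && typeof parsed === "object") {
    for (const f of FIELDS) {
      const v = (parsed as Record<string, unknown>)[f];
      if (typeof v === "string" && v.trim() !== "") result[f] = v.trim();
    }
  }
  return result;
}
```

The app would then write the returned values into the matching inputs and let the user review them before submitting.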

#10 · about 3 minutes

Building real-time conversational agents

Demonstrations of a multimodal insurance form assistant and a simple on-device conversational agent highlight the potential for creating interactive, real-time user experiences with local AI.
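For a conversational agent, streaming the answer keeps the UI responsive. The sketch below assumes the Prompt API's `promptStreaming()` method returning a `ReadableStream` of text chunks, and that each chunk is a new fragment (recent Chrome behavior; some earlier builds sent the full text so far). The function name is hypothetical.

```typescript
// Sketch: stream a reply from an on-device model and update the UI as text arrives.
interface StreamingSession {
  promptStreaming(input: string): ReadableStream<string>;
}

async function streamReply(
  session: StreamingSession,
  userText: string,
  onUpdate: (textSoFar: string) => void,
): Promise<string> {
  let reply = "";
  const reader = session.promptStreaming(userText).getReader();
  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    // Assumes each chunk is a delta, so append it to the text so far.
    reply += value;
    onUpdate(reply);
  }
  return reply;
}
```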

#11 · about 1 minute

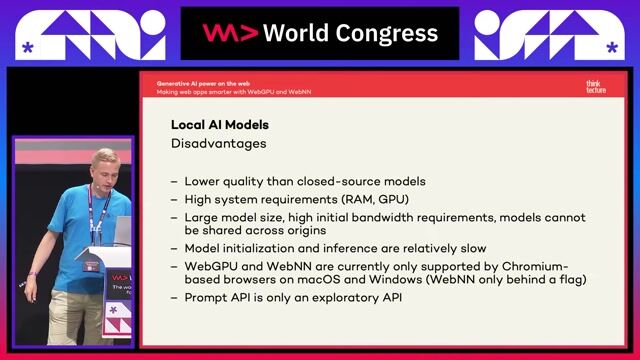

Weighing the pros and cons of on-device AI

On-device AI offers significant advantages in privacy, availability, and cost, but developers must consider the trade-offs in model capability, response quality, and system requirements compared to cloud solutions.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

03:24 MIN

Running on-device AI in the browser with Gemini Nano

Exploring Google Gemini and Generative AI

01:14 MIN

The future of on-device AI in web development

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

02:08 MIN

The future of on-device AI hardware and APIs

From ML to LLM: On-device AI in the Browser

02:20 MIN

The technology behind in-browser AI execution

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

03:35 MIN

Boosting performance with the upcoming WebNN API

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

02:51 MIN

Introducing the Web Neural Network (WebNN) standard

Privacy-first in-browser Generative AI web apps: offline-ready, future-proof, standards-based

03:28 MIN

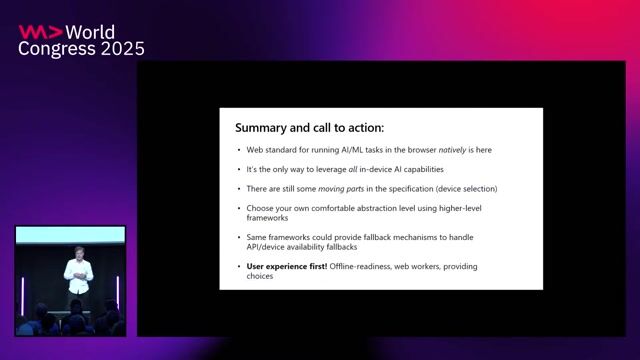

Best practices and the future of browser AI

Privacy-first in-browser Generative AI web apps: offline-ready, future-proof, standards-based

01:55 MIN

Key benefits of running AI in the browser

From ML to LLM: On-device AI in the Browser

Featured Partners

Related Videos

32:27

32:27Generative AI power on the web: making web apps smarter with WebGPU and WebNN

Christian Liebel

30:13

30:13Privacy-first in-browser Generative AI web apps: offline-ready, future-proof, standards-based

Maxim Salnikov

27:23

27:23From ML to LLM: On-device AI in the Browser

Nico Martin

33:51

33:51Exploring the Future of Web AI with Google

Thomas Steiner

25:47

25:47Performant Architecture for a Fast Gen AI User Experience

Nathaniel Okenwa

32:26

32:26Bringing the power of AI to your application.

Krzysztof Cieślak

31:12

31:12Using LLMs in your Product

Daniel Töws

30:04

30:04Self-Hosted LLMs: From Zero to Inference

Roberto Carratalá & Cedric Clyburn

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Speech Processing Solutions

Vienna, Austria

Intermediate

CSS

HTML

JavaScript

TypeScript

Lotum media GmbH

Bad Nauheim, Germany

Senior

Node.js

JavaScript

TypeScript

Commerz Direktservice GmbH

Duisburg, Germany

Intermediate

Senior

JO Media Software Solutions GmBh

Brunn am Gebirge, Austria

Senior

CSS

Angular

JavaScript

TypeScript

webLyzard

Vienna, Austria

DevOps

Docker

PostgreSQL

Kubernetes

Elasticsearch

+2