Daniel Töws

Using LLMs in your Product

#1about 2 minutes

Three pillars for integrating LLMs in products

The talk will cover three key areas for product integration: using the API, mastering prompt engineering, and implementing function calls for external data.

#2about 3 minutes

Making your first OpenAI API chat completion call

This section covers the basic code structure for making a chat completion request, including the different message roles and the stateless nature of the API.

#3about 3 minutes

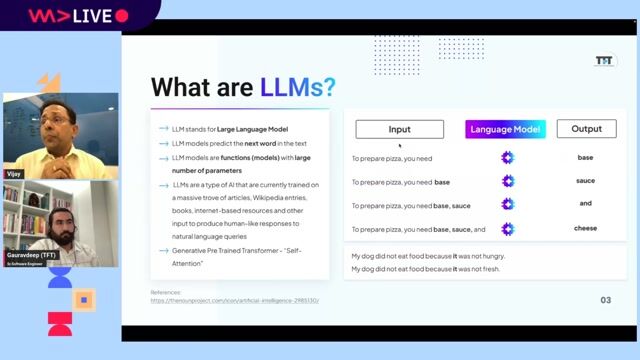

Choosing the right LLM for your use case

Key factors for selecting a model include its training dataset cutoff date, context length measured in tokens, and the number of model parameters.

#4about 5 minutes

Best practices for effective prompt engineering

Improve LLM outputs by writing clear instructions, providing context with personas and references, and breaking down complex tasks into smaller steps.

#5about 4 minutes

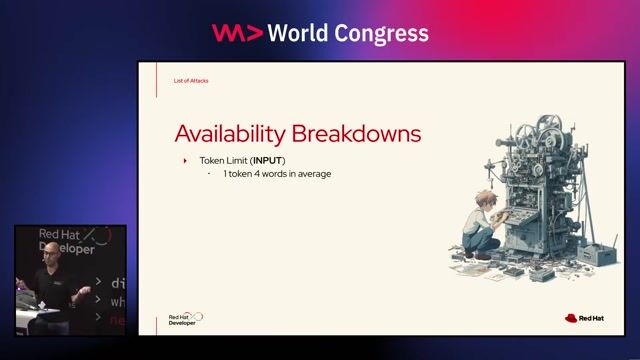

Understanding and defending against prompt injection

Prevent users from bypassing system instructions by reinforcing the original rules with a post-prompt at the end of the message history.

#6about 4 minutes

Giving LLMs new abilities with function calling

Function calling allows the LLM to request help from your own code to access external data or perform actions like searching a database.

#7about 2 minutes

Summary and resources for further learning

The talk concludes with a recap of core concepts and provides resources for advanced prompting techniques and retrieval-augmented generation (RAG).

#8about 7 minutes

Audience Q&A on practical LLM implementation

The Q&A covers practical concerns like managing context length, prompt testing costs, implementing function call logic, and ensuring reliable JSON output.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

02:35 MIN

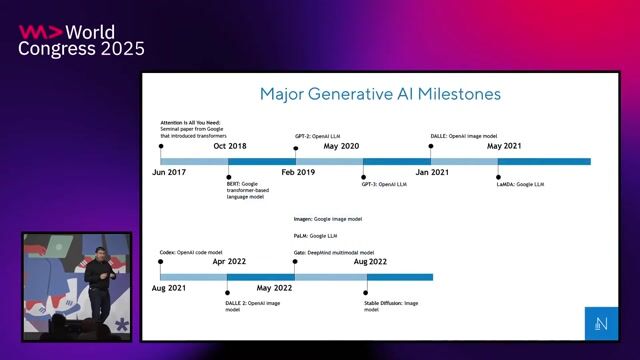

The rapid evolution and adoption of LLMs

Building Blocks of RAG: From Understanding to Implementation

01:40 MIN

Using large language models to improve customer support

Unlocking the potential of Digital & IT at Vodafone

03:30 MIN

Using large language models for voice-driven development

Speak, Code, Deploy: Transforming Developer Experience with Voice Commands

04:55 MIN

Building custom applications with the OpenAI chat API

Develop AI-powered Applications with OpenAI Embeddings and Azure Search

03:42 MIN

Using large language models as a learning tool

Google Gemini: Open Source and Deep Thinking Models - Sam Witteveen

04:08 MIN

Integrating generative AI for dynamic responses

Creating bots with Dialogflow CX

07:44 MIN

Defining key GenAI concepts like GPT and LLMs

Enter the Brave New World of GenAI with Vector Search

02:26 MIN

Understanding the core capabilities of large language models

Data Privacy in LLMs: Challenges and Best Practices

Featured Partners

Related Videos

26:30

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

32:26

32:26Bringing the power of AI to your application.

Krzysztof Cieślak

26:54

26:54Make it simple, using generative AI to accelerate learning

Duan Lightfoot

33:28

33:28Prompt Engineering - an Art, a Science, or your next Job Title?

Maxim Salnikov

58:00

58:00Creating Industry ready solutions with LLM Models

Vijay Krishan Gupta & Gauravdeep Singh Lotey

28:38

28:38Exploring LLMs across clouds

Tomislav Tipurić

29:50

29:50OpenAPI meets OpenAI

Christopher Walles

29:00

29:00Beyond the Hype: Building Trustworthy and Reliable LLM Applications with Guardrails

Alex Soto

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Commerz Direktservice GmbH

Duisburg, Germany

Intermediate

Senior

SMG Swiss Marketplace Group

Canton de Valbonne, France

Senior

CGI Group Inc.

Köln, Germany

Senior

Data analysis

Natural Language Processing